Research Background

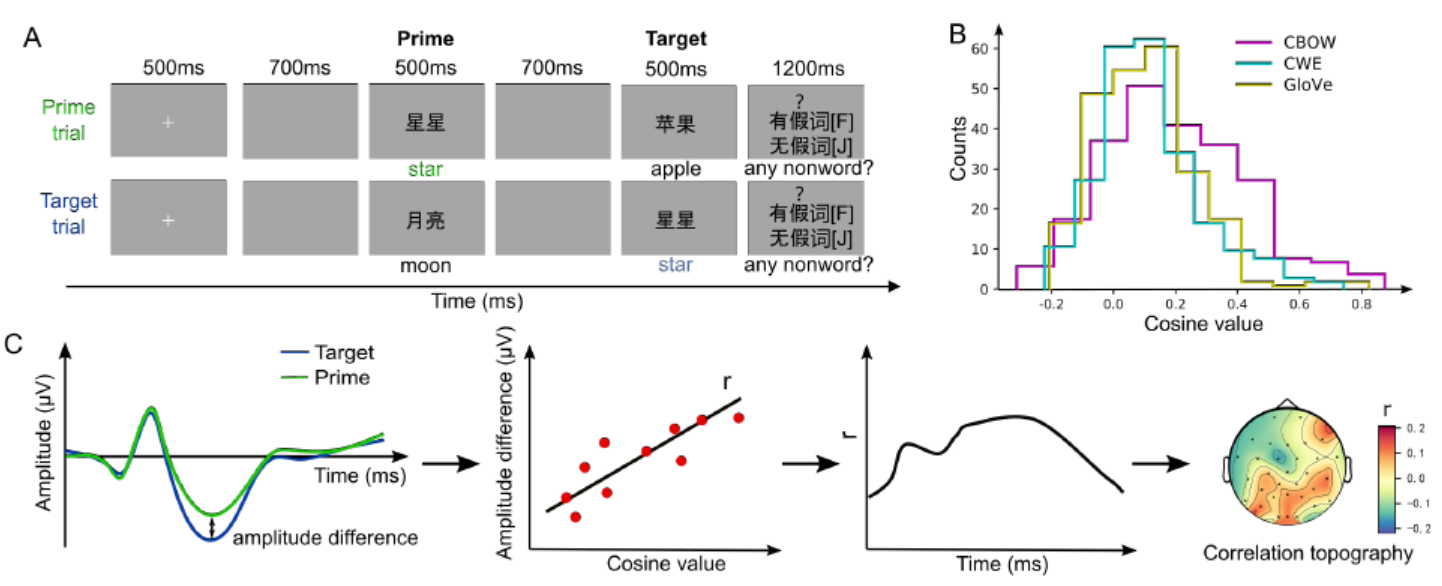

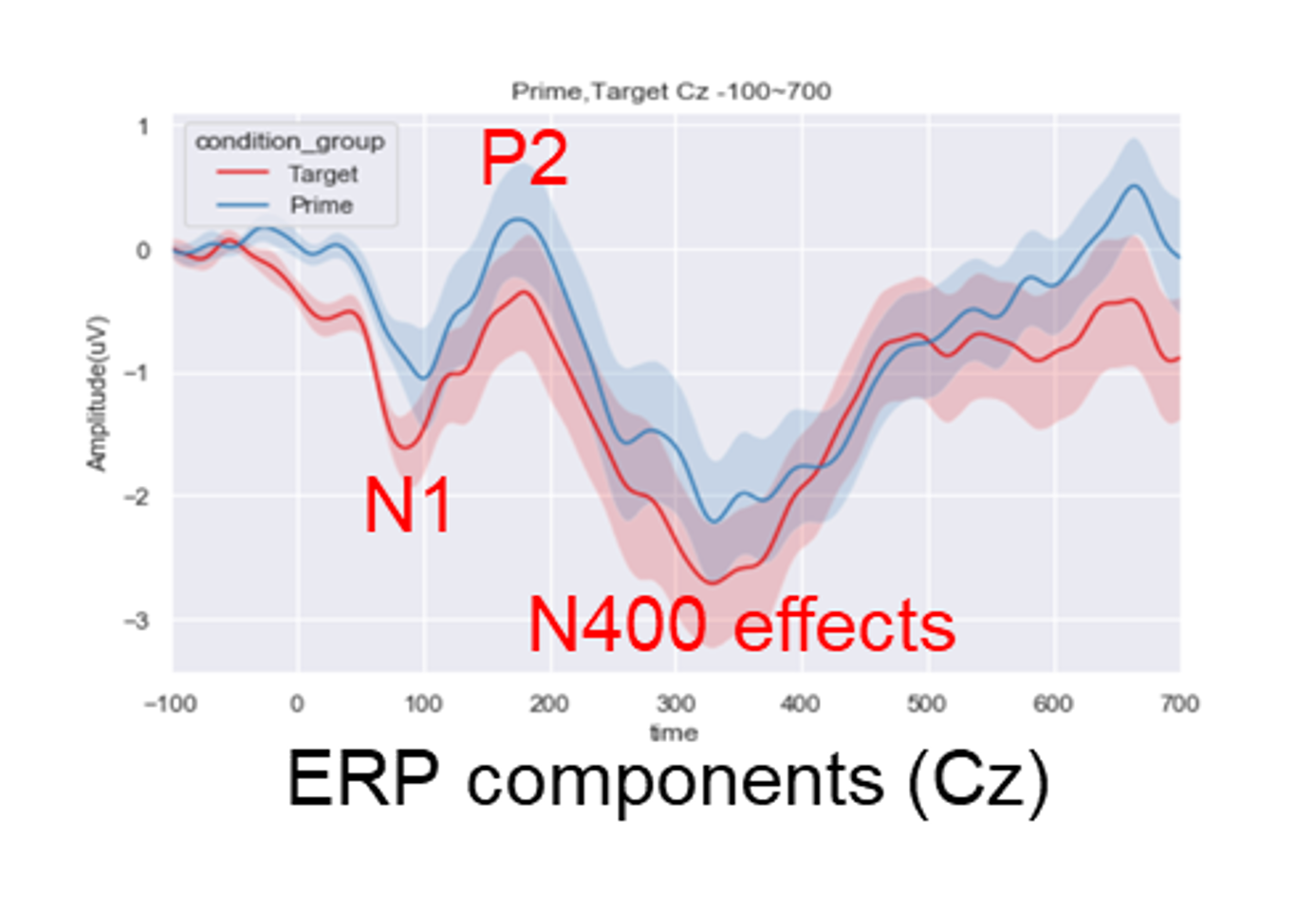

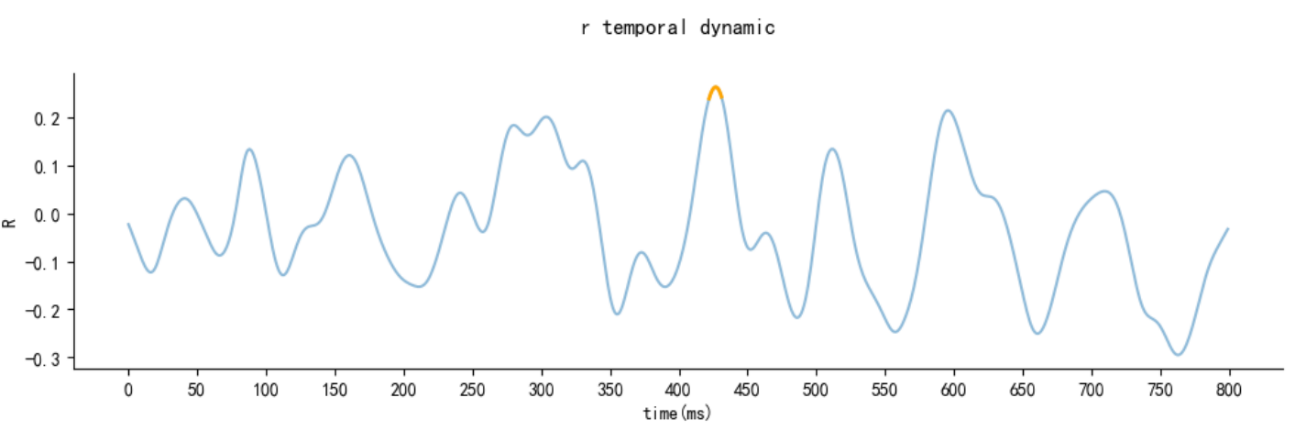

Semantic representation has been studied independently in neuroscience and computer science. A deep understanding of human neural computation, as well as progress toward strong artificial intelligence, calls for a joint effort across the two fields in the language domain. Using neural recordings with fine temporal resolution, we investigated whether these two complex systems share comparable representational formats for lexical semantics. We found that semantic representations generated by computational models correlated significantly with EEG responses at an early stage of the typical semantic processing time window in a two-word semantic priming paradigm. Moreover, three representative computational models predicted EEG responses differently across the time course of word processing. Our study provides a finer-grained understanding of the neural dynamics underlying semantic processing and develops an objective biomarker for assessing human-like computation in computational models. This novel framework opens a promising path for bridging disciplines in the investigation of higher-order cognitive functions in human and artificial intelligence.
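The time-resolved correlation between model representations and EEG responses can be illustrated with a minimal sketch. It rests on several assumptions made only for illustration. First, each trial's prime and target words have vector embeddings from some computational model. Second, the model's semantic relation for a prime-target pair is reduced to the cosine similarity of those vectors. Third, EEG amplitudes are available as a trials × time points array for a single channel or region. The function names (`cosine_similarity`, `pearson_r`, `similarity_eeg_timecourse`) and the synthetic data are hypothetical. This section does not specify the actual analysis pipeline, such as representational similarity analysis versus regression, or the statistical test used.

```python
import math
import random


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def similarity_eeg_timecourse(prime_vecs, target_vecs, eeg):
    """Correlate model-derived prime-target similarity with EEG at each time point.

    Hypothetical inputs (assumptions, not the study's data format):
      prime_vecs, target_vecs: one embedding vector per trial
      eeg: eeg[trial][t] is the amplitude at time point t for one channel/region
    Returns one Pearson r per time point, computed across trials.
    """
    sims = [cosine_similarity(p, q) for p, q in zip(prime_vecs, target_vecs)]
    n_times = len(eeg[0])
    return [pearson_r(sims, [trial[t] for trial in eeg]) for t in range(n_times)]


if __name__ == "__main__":
    random.seed(0)
    n_trials, dim, n_times = 40, 5, 10
    # Synthetic stand-ins for model embeddings of prime and target words.
    primes = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(n_trials)]
    targets = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(n_trials)]
    sims = [cosine_similarity(p, q) for p, q in zip(primes, targets)]
    # Synthetic EEG: pure noise, except time point 4 tracks similarity exactly.
    eeg = [[random.gauss(0, 1) for _ in range(n_times)] for _ in range(n_trials)]
    for trial, s in zip(eeg, sims):
        trial[4] = 3.0 * s + 1.0
    rs = similarity_eeg_timecourse(primes, targets, eeg)
    print([round(r, 2) for r in rs])
```

A time point where r departs reliably from zero would suggest that the model's similarity values track the EEG signal at that latency. A real analysis would also need a significance test across time points, for example a permutation test with correction for multiple comparisons, which this sketch omits.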

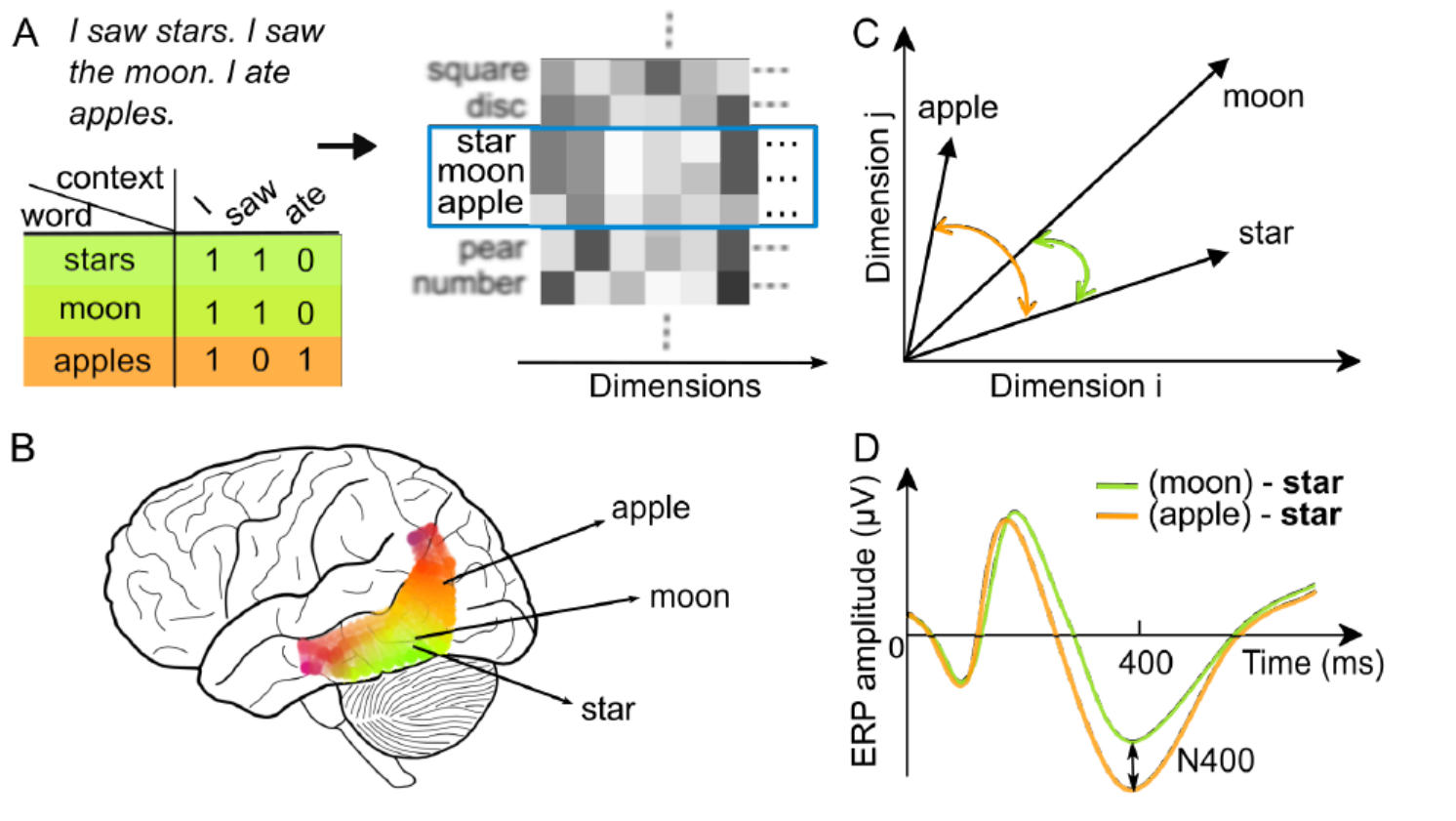

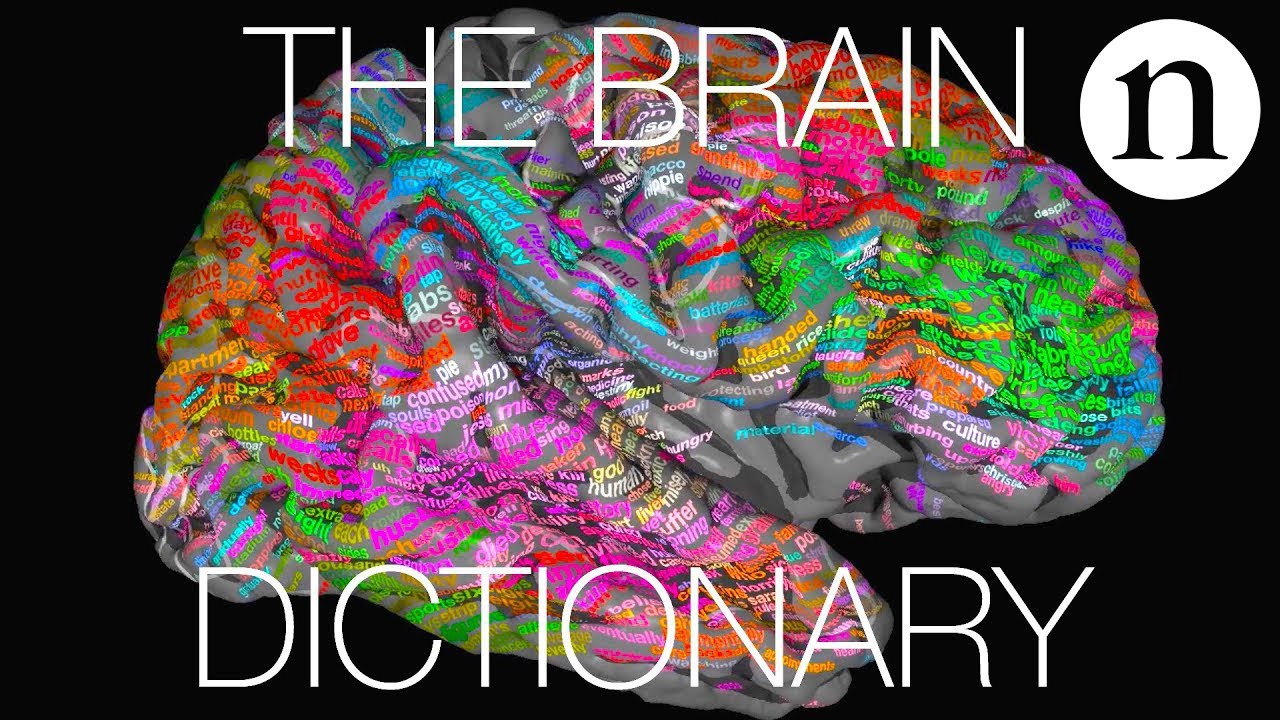

Figure 2.1 The Brain Dictionary. Adapted from Huth et al., 2016.